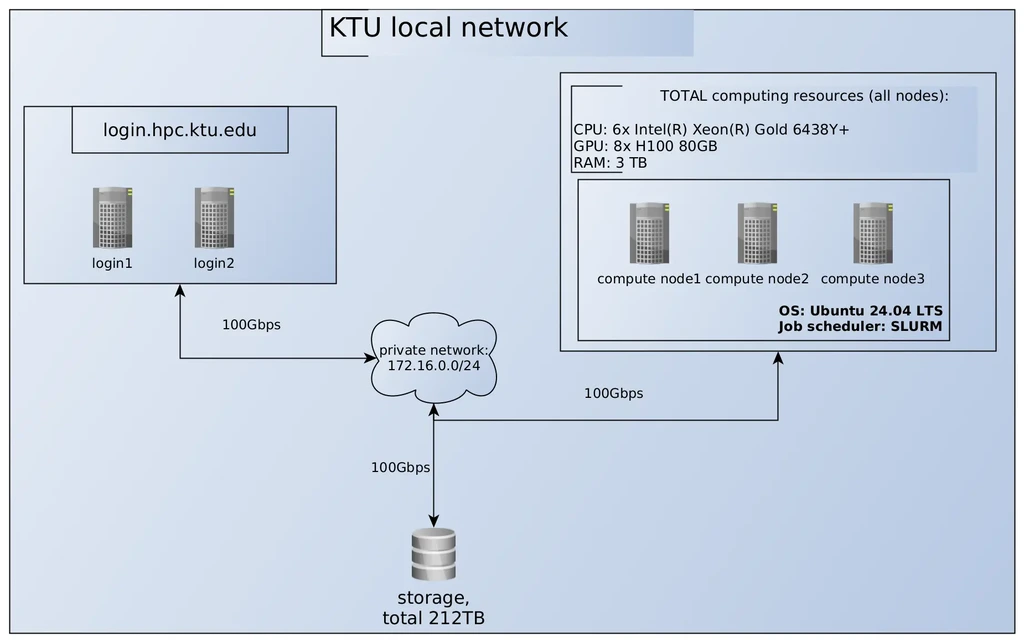

Fig. 1. HPC Cluster Diagram

| Item | Details |
| --- | --- |
| Login | login.hpc.ktu.edu (automatically redirects to login1 or login2) |
| Number of Nodes | 3 |
| User Storage | 1 TB (contact hpc@ktu.edu for specific requirements) |
| CPU (login node) | 8 cores (not intended for code compilation) |
| Compute nodes | 3 units, each: |
| · CPU | 2 x 32 cores (Intel(R) Xeon(R) Gold 6438Y+) |
| · GPU | 2 x H100 80GB (node 3 with 4 x H100) |
| Operating system | Ubuntu 24.04 LTS |
| Network | 2 x 100 Gbps between nodes |

Table 1. General Cluster Information

The cluster consists of three compute servers, each with 2 x Intel(R) Xeon(R) Gold 6438Y+ CPUs, 1024 GB of memory, and 2 x NVIDIA H100 80GB GPUs (node 3 has 4 x NVIDIA H100 80GB GPUs). Each user has individual home storage on the CephFS file system.

The HPC servers run the Ubuntu 24.04 LTS operating system, equipped with core drivers and the SLURM workload manager. Each user can install the necessary software in their home directory and use SLURM to submit compute jobs to the HPC nodes.
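As an illustration of the job submission workflow described above, the following minimal sketch generates a single-node SLURM batch script in Python. The helper name `build_sbatch_script`, its default values, and the example command are hypothetical. The sketch also assumes that GPUs are exposed as a generic resource named `gpu`, which this document does not confirm; check with hpc@ktu.edu for the actual resource and partition names.

```python
def build_sbatch_script(job_name, command, gpus=1, cpus_per_task=8,
                        time_limit="01:00:00"):
    """Return the text of a single-node SLURM batch script.

    Hypothetical helper for illustration; the defaults are not cluster policy.
    """
    lines = [
        "#!/bin/bash",
        f"#SBATCH --job-name={job_name}",
        "#SBATCH --nodes=1",
        "#SBATCH --ntasks=1",
        f"#SBATCH --cpus-per-task={cpus_per_task}",
        # Assumption: GPUs are configured as a generic resource named "gpu".
        f"#SBATCH --gres=gpu:{gpus}",
        f"#SBATCH --time={time_limit}",
        # %x expands to the job name and %j to the job ID.
        "#SBATCH --output=%x-%j.out",
        "",
        command,
        "",
    ]
    return "\n".join(lines)


if __name__ == "__main__":
    # Print a script that requests two GPUs and runs nvidia-smi.
    print(build_sbatch_script("demo", "nvidia-smi", gpus=2))
```

Saving the printed text to a file such as `demo.sbatch` and running `sbatch demo.sbatch` on a login node would queue the job; `squeue -u $USER` then lists its status.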

To access AI HPC services, please contact hpc@ktu.edu, providing a preliminary estimate of the resource requirements and intended usage duration.